This article includes mention of gender-based violence, domestic violence, stalking, misogynistic language, and online and in-person harassment.

Last year, the founder of Gender Rights in Tech (GRIT), a South African NGO, recounted a story of a young woman who, after declining a man's romantic proposal, endured two years of escalating stalking and harassment. The man broke into her home, assaulted her friends, and targeted her family.

According to the story, the sustained abuse left the young woman with a stress-induced heart condition and afraid to leave her home despite having a protection order and an open criminal case. Although the chief prosecutor assured her that a warrant would be issued, police took no further action and made critical errors that marred the investigation, revealing a systemic failure to enforce the very laws intended to protect her. Unfortunately, this story is all too common.

Nearly 1 in 3 women worldwide—approximately 736 million—have experienced physical or sexual violence at least once in their lifetime. This figure does not include sexual harassment. This violence is associated with at least eight adverse health outcomes that often persist throughout life, demanding sustained and multisectoral interventions.

Efforts to address gender-based violence (GBV) are hindered by a critical lack of robust, high-quality data needed to understand where, why, and how often the violence occurs. Despite its global scale, GBV remains underreported and undermeasured, particularly in low- or middle-income countries. The 2023 Global Burden of Disease study reveals that 62.3% of countries have had fewer than five data sources on intimate partner violence over the 33 years from 1990 to 2023.


Multiple factors contribute to persistent gaps in GBV data, including stigma, fear of retaliation, and weak reporting systems that discourage disclosure. Many low- or middle-income countries also lack the resources or capacity needed to conduct regular, population-based surveys. The scarcity of data raises concerns about the accuracy and representativeness of global violence statistics, compelling policymakers to make assumptions that hinder effective resource allocation and priority setting for violence prevention and response efforts worldwide.

This year, these data gaps have deepened as a result of the U.S. freeze on foreign aid. The February decision put an indefinite pause on the Demographic and Health Survey (DHS). Run by the U.S. Agency for International Development (USAID), the survey is a key source of nationally representative GBV data from more than 25 countries.

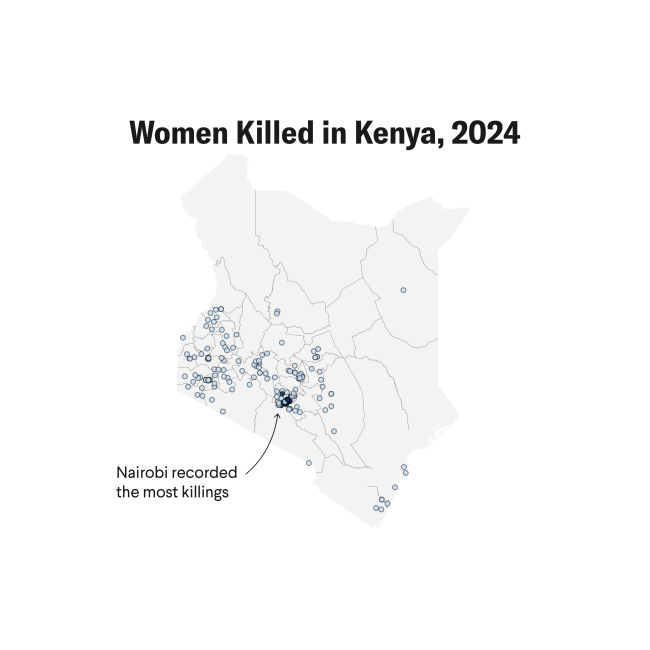

Sub-Saharan Africa, which has historically borne the highest global burden of GBV, faces severe data gaps, with 11 countries relying on just a single data source that met quality standards. Five of these countries rely exclusively on DHS data. This situation underscores the fragility of current systems and reinforces the need for a fresh approach to GBV data, including through artificial intelligence (AI). AI offers opportunities to fill these gaps and enhance traditional data systems, enabling more accurate, real-time insights into GBV, including the identification of emerging trends and patterns to inform targeted interventions and policies.

Using Artificial Intelligence to Track and Analyze GBV

Innovative tools such as mobile apps, anonymized digital platforms, and community-led reporting systems are reshaping how GBV is monitored and addressed, offering more responsive and accessible alternatives to traditional methods. GRIT's mobile app and AI chatbot, codesigned with survivors, have supported more than 150 survivors in the past three years by enabling confidential reporting, offering legal guidance, and connecting users with court and postviolence care.

According to GRIT, one survivor of intimate partner violence used the GRIT app on four occasions during violent episodes over the previous year. Each time, they activated the emergency response feature, which, with their consent, captured crucial information for potential legal use. The app allowed them to securely store audio recordings of emergency calls, document their experiences, and receive detailed reports from armed responders. It also enabled them to preserve key evidence, such as logging hospital visits, for potential legal action. This case illustrates how digital tools can empower survivors to safely and systematically document their experiences, improving the quality and usability of GBV data.

Additionally, AI and generative AI offer powerful opportunities to further enhance GBV monitoring and response. These technologies can process large-scale, unstructured datasets, enabling the identification of patterns and correlations that would otherwise remain hidden. Traditional data collection methods often lag behind the rapid evolution of online discourse, particularly on social media platforms, whereas natural language processing (NLP) enables real-time detection of harmful content, including misogyny and harassment, across digital platforms.

Plan International's report "Free to Be Online?" highlights the pervasive nature of online abuse that adolescent girls worldwide face. The report documents numerous instances of explicit threats to girls made in public comments, such as:

"When you leave your house, I'm going to make you feel scared and harass you and stalk you while you walk out."

"I know where you live."

"It's just a societal thing. It's [the] way things roll, you know what I'm saying. After I write this message, it's my misogynistic duty to go outside and harass women."


These instances highlight the normalization and severity of online harassment, which often mirrors offline gender-based violence. Individuals also use digital platforms to share personal experiences and report abuse, contributing to potential datasets for analysis. NLP techniques can effectively identify both explicit and implicit forms of violence, revealing trends and sentiments that traditional surveys might overlook. For example, a study on online misogyny in India used NLP to track abusive language on X during COVID-19, showing how social media discourse reflects broader societal dynamics. This type of real-time analysis can uncover what forms of gendered harm are being normalized or challenged. It can also provide evidence needed to update and reform legislative frameworks and develop effective enforcement mechanisms to prevent and respond to online violence.

Generative AI for Trauma-Informed Interviewing

As technology increasingly shapes how GBV is both experienced and reported, it also opens new avenues for more scalable and survivor-centered data collection. There is no universally accepted gold standard for collecting data on GBV, so inconsistent information and data collection practices compound the problem of data sparsity. Traditional face-to-face methods can unintentionally harm survivors by increasing the risk of retraumatization, stigma, or retaliation, and are often too costly to implement widely or regularly.

Generative AI can be a cost-effective approach that prioritizes survivor safety, confidentiality, and autonomy. By enabling self-administered, adaptive digital surveys, generative AI can offer a safe environment for survivors to share their experiences at their own pace, without the risks that can arise from the direct involvement of a human interviewer. These tools can be designed to use trauma-informed language, provide real-time emotional support or resources, and adjust questions dynamically based on responses, helping minimize distress and reduce the likelihood of retraumatization.

Although the application of generative AI in GBV research is still emerging, it has already been used across various other health domains. For example, Surgo Health—a technology company and public benefit corporation focused on improving health care by understanding the diverse factors influencing individual behaviors—used a generative AI personalized interview platform to explore the complex drivers of vaccine hesitancy in the United States during the COVID-19 pandemic, converting narrative responses into behavioral segments that informed targeted public health strategies. Applying this methodology to GBV would allow researchers to quantify nuanced factors such as stigma, fear, or institutional mistrust, and analyze their relationship to outcomes such as service uptake or policy impact.

As technologies evolve, ethical and survivor-centered practices are essential. Guidelines from organizations like the Sexual Violence Research Initiative—a leading global network advancing research on violence against women, children, and other forms of gender-based violence—and the MERL Tech Initiative—a social venture supporting ethical, tech-enabled program design, implementation, and monitoring, evaluation, research, and learning—provide frameworks for the responsible use of AI in GBV research.

The development and deployment of these technologies require strict data privacy protections, and personal or identifiable data should never be included in generative AI systems. This practice helps mitigate privacy risks and ensures ethical data handling.

Tools should be developed through culturally responsive design, integrating the languages, worldviews, and lived experiences of the survivors they serve. This approach ensures that design reflects and respects the diverse cultural contexts of users, fostering inclusivity, making systems relevant, and reducing biases in design and development. Survivor-centered protocols are essential to ensure AI systems do not cause harm or reinforce existing power imbalances.

With thoughtful implementation, AI can help shift GBV monitoring from reactive to proactive, closing data gaps, amplifying survivor voices, and supporting more timely and targeted interventions.

AUTHOR'S NOTE: All statements and views expressed in this article are solely those of the individual authors and are not necessarily shared by their institution.